Augmented reality (AR) is a technology that will become the primary means of interacting with digital devices over the next decade. AR will revolutionize the labor workspace just as the personal computer revolutionized the office workspace in the 1980s. Frontline workers using AR have information technology pertinent to their job delivered directly within the context of the task at hand.

Augmented reality overlays a “layer” of relevant digital information on the user’s view of the physical objects around them, so that the information appears to be a real part of the user’s surroundings. This digitally produced, synthetic layer augments the user’s experience of reality. AR is primarily a visual experience, although audio is often added to enhance it. Because AR mixes digital content into reality, the term “mixed reality” is sometimes used interchangeably with AR.

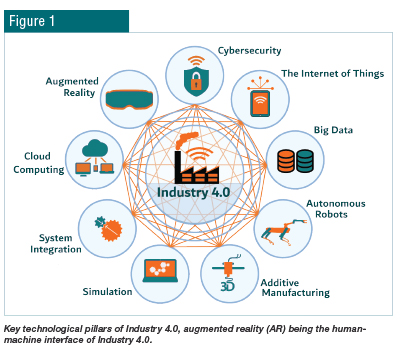

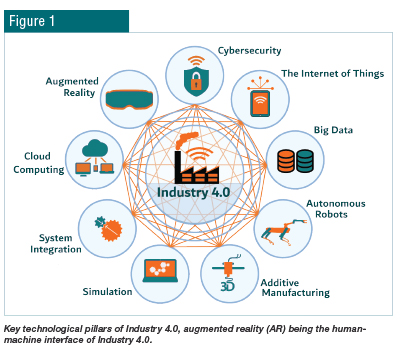

AR is one of nine key technological pillars supporting Industry 4.0, the fourth industrial revolution.

AR is already beginning to fulfill its role as the human-machine interface (HMI) of Industry 4.0. Many consumer electronics users have already seen, and even used, AR apps. Most consumer mobile phones, tablets and portable PCs already offer AR-capable software frameworks at the platform level (e.g., Apple’s ARKit and Google’s ARCore). Many major electronics original equipment manufacturers (OEMs) are developing wearable devices, such as spectacles, to serve as the user’s HMI. These new devices differ from, and improve upon, the awkward Google Glass product many may recall from 2013–2014, which was not a true AR device but merely a contextual display.

HISTORY

The term “augmented reality” was coined in 1990 by a researcher at Boeing but, as with most technologies, AR’s backstory is long and nuanced. AR shares its beginnings with its cousin, virtual reality (VR).

It is important to address these two related but distinct technologies. The easiest way to differentiate between AR and VR is to focus on the key objectives of the technologies: Virtual reality’s goal is to replace the user’s reality with a synthetic, virtual experience. Augmented reality’s goal is to enhance the user’s reality by presenting useful information within the context of the user’s physical reality.

It is generally accepted that humans have five basic senses: touch, sight, hearing, smell and taste.

A “perfect” VR would be difficult to achieve: it is not easy to create synthetic versions of touch and taste. To keep things simple and practical, we’ll focus on the user’s visual stimuli. Contemporary VR replaces the user’s reality with a synthetic, computer-generated environment, typically using a head-mounted display (HMD) with a stereoscopic view: one screen for each eye. As explained earlier, AR augments the user’s view of reality with a layer of data that appears to be part of reality, enhancing situational awareness of the objects within it. In short, VR intends to replace reality, while AR aims to enhance it.

The hardware and software of AR and VR share the same roots. In 1968, a team from the Massachusetts Institute of Technology developed the first HMD used for displaying computer-generated graphics, and both VR and AR evolved from that point. In simplest terms, executing AR is more difficult than executing VR. AR must combine computer graphics with the physical objects around the user, and the graphics should appear to stay in place, in proportion and as real as those physical objects. VR’s comparatively simple task is to track the user’s view and present the correct computer-generated view relative to the user’s movements.

Since the 1970s, the U.S. military has used various AR systems, such as heads-up displays (HUDs) and HMDs, to enhance situational understanding and performance while viewing reality. Notably, the newest U.S. fighter jet, the F-35, is the first modern fighter to do without a conventional HUD and rely solely on its helmet-mounted AR display.

Sports and media have employed AR since the 1990s, as hardware became capable of combining computer graphics with live video of reality. For example, viewers of a televised sporting event may see lines drawn on the field that show ball position or important zones, and even advertising. These elements appear anchored to the field even as the camera and the players move around them.
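A minimal sketch, in Python, of why such a line can stay anchored: because the field is flat, each camera view of it can be described by a 3×3 planar homography that maps field coordinates to image pixels. The sketch assumes a camera-tracking system supplies that homography (called `h` here) for every video frame; the function names and the field coordinate system are hypothetical and not taken from any broadcast product.

```python
from __future__ import annotations


def apply_homography(h: list[list[float]], x: float, y: float) -> tuple[float, float]:
    """Map a point (x, y) on the flat field to pixel coordinates (u, v)."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return u / w, v / w  # divide by w: homogeneous (perspective) normalisation


def field_line_pixels(h, line_x: float, y_min: float, y_max: float):
    """Pixel endpoints of a virtual line drawn across the field at position line_x.

    Assumption: h is the per-frame field-to-image homography from camera tracking.
    """
    return apply_homography(h, line_x, y_min), apply_homography(h, line_x, y_max)


if __name__ == "__main__":
    # Hypothetical homography for one frame: scale by 10 px per unit, then shift.
    frame_h = [[10.0, 0.0, 50.0], [0.0, 10.0, 20.0], [0.0, 0.0, 1.0]]
    print(field_line_pixels(frame_h, 30.0, 0.0, 50.0))
```

As the camera pans or zooms, only `h` changes; recomputing the endpoints every frame keeps the line at the same position on the field. Broadcast systems also have to avoid drawing over the players, which this sketch ignores.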

30 March 2016 was a major milestone in making premium-grade AR hardware available to the consumer market: on that day, Microsoft released the first version of the HoloLens. Most consider this device the first practical consumer-electronics AR HMD. The HoloLens can sense and track the user’s physical surroundings and render graphics correctly in the user’s view relative to those surrounding objects. Since then, Microsoft and other OEMs have launched, or announced plans for, ever more sophisticated devices. Within the next 2–5 years, these HMDs will follow the smartphone’s trajectory of shrinking size and cost while making huge advances in capability.

TECHNOLOGY

As explained, AR makes digital information appear real within the user’s reality. Oversimplifying for clarity, devices mix digital information with reality in one of two ways. Smartphones and tablets mix the digital layer with video of the physical surroundings captured by the device’s camera: the digital information is aligned with the video feed, and the combined result is presented as one image on the handheld’s display screen.

Alternatively, the HoloLens, the Magic Leap One and most other HMDs use sensors to measure the space around the user. This spatial mapping information is supplied to hardware that calculates the size and position of the digital elements before presenting them to the user’s eyes through transparent optical display elements (e.g., waveguides). The user sees a 3D stereoscopic view of the digital information mixed over reality. As the user moves through space, the HMD’s spatial mapping sensor array tracks the user’s location, allowing the processors to adjust the size and position of the digital elements so they continue to appear as part of reality. In these ways, AR can attach useful information to equipment and other objects in the user’s surroundings.
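To make the HMD approach more concrete, here is a minimal Python sketch of how a renderer could keep a label anchored at a fixed point in the room: every frame, it re-expresses that point relative to the tracked head pose and projects it separately for each eye. The pinhole camera model, the yaw-only head pose, the focal length, the screen centre and the 63 mm interpupillary distance are simplifying assumptions for illustration; production HMDs track full six-degree-of-freedom head poses and use calibrated per-eye projections.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeadPose:
    """Hypothetical tracked head pose: position in metres and yaw in radians."""
    x: float
    y: float
    z: float
    yaw: float  # rotation about the vertical (y) axis; 0 means looking along +z


def world_to_head(pose: HeadPose, px: float, py: float, pz: float):
    """Express a world-anchored point in head coordinates (x right, y up, z forward)."""
    dx, dy, dz = px - pose.x, py - pose.y, pz - pose.z
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    # Apply the inverse of the head's yaw rotation to undo the turn of the head.
    return c * dx - s * dz, dy, s * dx + c * dz


def project(x: float, y: float, z: float, focal_px: float, cx: float, cy: float
            ) -> Optional[tuple[float, float]]:
    """Pinhole projection to pixels; None if the point is behind the viewer."""
    if z <= 0.01:
        return None
    return cx + focal_px * x / z, cy - focal_px * y / z  # image v grows downward


def stereo_pixels(pose: HeadPose, anchor, ipd: float = 0.063,
                  focal_px: float = 1000.0, cx: float = 640.0, cy: float = 360.0):
    """Pixel position of an anchored label in the left and right eye displays."""
    hx, hy, hz = world_to_head(pose, *anchor)
    left = project(hx + ipd / 2, hy, hz, focal_px, cx, cy)   # left eye sits at -ipd/2
    right = project(hx - ipd / 2, hy, hz, focal_px, cx, cy)  # right eye sits at +ipd/2
    return left, right


def apparent_size_px(size_m: float, depth_m: float, focal_px: float = 1000.0) -> float:
    """On-screen size of an object size_m metres across, seen at depth_m metres."""
    return focal_px * size_m / depth_m


if __name__ == "__main__":
    label_anchor = (0.0, 0.0, 2.0)  # hypothetical label 2 m in front of the start pose
    print(stereo_pixels(HeadPose(0.0, 0.0, 0.0, 0.0), label_anchor))
    # Walking back so the label is twice as far away halves its apparent size.
    print(apparent_size_px(0.5, 2.0), apparent_size_px(0.5, 4.0))
```

The slight horizontal offset between the left and right pixel positions is what produces the stereoscopic depth cue, and the division by depth is why digital elements must be resized as the user moves.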

AR systems typically use the same ubiquitous client-server model as most other networked electronics. User devices (“clients”) running client software communicate via the internet with a central hub computer (“server”) running the server version of the software. Some AR systems offer “offline modes” that allow client devices to function during a job without an internet connection. The device is synchronized with the server before and after the offline job: the information needed offline is downloaded beforehand, and the data generated by the client device during the job is uploaded afterward for proper documentation.

APPLICATION WITHIN THE STEEL INDUSTRY

The metallurgical industry faces a serious challenge: a growing gulf between a well-trained, experienced, aging workforce and a shortage of incoming workers. To make matters worse, incoming workers typically have a significant skills gap, which seems to have broadened over time. The average steelworker’s age is increasing, while lean staffing levels provide fewer opportunities to transfer knowledge from more experienced workers to less experienced ones. Operational workflow art is being lost while skills gaps and productivity pressures grow. Workflow art is the precious knowledge and ability to execute a sequence of actions correctly and safely to complete a task or job.
The collection of workflow art within an organization can be summarized as tribal knowledge: a unique combination of plant equipment, technique development, resource constraints and other details that reflect geographic culture, management style and company mindset. Human resource trends, and the resulting loss of tribal knowledge, are threatening metallurgical plant performance. AR systems will help.

Frontline labor workers using AR are empowered with information technology pertinent to their job. Most industrial workflows involve specific procedures that are not intuitively obvious and therefore require training. Even with proper training, many tasks are so complex, so infrequently performed or so consequential if done improperly that the worker must take extra steps to ensure safe success. These steps may include consulting arcane or even obsolete reference manuals, asking the most experienced person for advice, hiring specialized outside contractors to complete the task, and other time-consuming, expensive options. Furthermore, even the most experienced people make mistakes through lapses of concentration or memory, or through distraction.

Workflow safety and productivity benefit from detailed information, nuance, technique and experience: the tribal knowledge. It is even better when that knowledge is presented visually, directly in the context of the job. AR revolutionizes the frontline, “blue collar” workspace by providing as much tribal knowledge and workflow art as is necessary to mitigate errors and optimize safety. The ultimate outcomes are higher productivity and quality yield, and therefore optimized profit.

IMPLEMENTATION

Metallurgical companies have begun to adopt various AR systems to digitalize their tribal knowledge and workflows, giving less experienced workers immediate access to digitalized workflows and system data. The steady advance of wearable hardware will unleash ever more impressive capabilities, enabling metallurgical companies to digitalize knowledge from experienced workers before they retire, store that digital knowledge on servers and deploy it as needed.

For example, a steel plant producing automotive galvanized sheet has demonstrated the benefit of AR in its operation. It has shown that new workers can successfully and safely complete critical operations requiring precise technique without training from an experienced operator. The experienced trainer needs to execute the job only once while recording, or “authoring,” it into the AR system. Once the job has been authored stepwise, with all its detail and nuance, any worker can replicate it using the AR system.

Reprofiling sheet welder copper wheels is one such job. If the profile of a copper wheel is wrong, there is a great risk of the weld breaking as it passes through the line. A weld break forces the galvanizing line to stop for at least a few hours, and sometimes for as long as 1–2 days if the break occurs in the furnace and/or the loose steel sheet damages equipment. Therefore, the apparently trivial task of machining a clean, uniform trapezoidal cross-section on the perimeter of the small copper wheels is critical.

The company’s expert of 25+ years needed approximately one hour to author his workflow art into an AR system. From that moment, the expert’s part was done: his knowledge had been recorded so that any number of new people could replicate his expert, stepwise technique. The day after the expert authored his work, a young, untrained person followed the expert’s technique using only the AR system. The expert was present only to verify the AR system’s ability to convey information to the new person. The new person neither received nor asked for help while following the authored steps, and the expert verified that the job was done perfectly. This demonstration and others convinced the steel company to implement the AR system. Unfortunately, the decision was made shortly before the COVID-19 pandemic, so progress has stalled for now. Ironically, a technology that would let people work socially distanced could not be implemented before social distancing made training and deployment impossible.
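The authoring-and-replay approach described in this section can be sketched as a simple data structure: the expert records an ordered list of steps once, and the AR client then guides each worker through them strictly in sequence, logging every confirmed step. This is a hypothetical Python model for illustration only; the class names, fields and step log are assumptions, not the data model of the AR system the steel plant used, and the step texts are placeholders because the actual reprofiling procedure is not given here.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    """One authored step: the expert's instruction plus optional captured media."""
    instruction: str
    media: list[str] = field(default_factory=list)  # hypothetical photo/video file names


@dataclass
class AuthoredWorkflow:
    """A job recorded ("authored") once by an expert, as an ordered list of steps."""
    title: str
    author: str
    steps: list[Step] = field(default_factory=list)

    def record(self, instruction: str, *media: str) -> None:
        """Authoring: append the next step in the order the expert performs it."""
        self.steps.append(Step(instruction, list(media)))


class WorkflowSession:
    """Replay: guides one worker through the authored steps strictly in sequence."""

    def __init__(self, workflow: AuthoredWorkflow) -> None:
        self.workflow = workflow
        self.index = 0  # position of the step currently shown to the worker
        self.log: list[tuple[int, str]] = []  # (step number, worker) for documentation

    @property
    def done(self) -> bool:
        return self.index >= len(self.workflow.steps)

    def current(self) -> Optional[Step]:
        """The step to display now, or None once the job is finished."""
        return None if self.done else self.workflow.steps[self.index]

    def confirm(self, worker: str) -> None:
        """The worker confirms the current step; only then is the next one shown."""
        if self.done:
            raise RuntimeError("workflow already complete")
        self.log.append((self.index + 1, worker))
        self.index += 1


if __name__ == "__main__":
    # Placeholder steps: the real reprofiling procedure is not described in the text.
    wf = AuthoredWorkflow("Reprofile welder copper wheel", author="expert")
    wf.record("Expert's instruction for step 1", "step1.mp4")
    wf.record("Expert's instruction for step 2")
    session = WorkflowSession(wf)
    while not session.done:
        print(session.current().instruction)
        session.confirm("new worker")
    print(session.log)
```

In a system with an offline mode, a record like `session.log` is the kind of client-generated data that would be uploaded to the server at the next synchronization, documenting that each step was completed and by whom.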
